The AI Creativity Paradox: Metacognition, Emotion, and the Science of Creative Intelligence

Multidisciplinary Insights into Why Organizations Fail to Unlock Generative AI's Creative Potential

The AI creativity paradox confronts organizations worldwide: while 72% of companies have deployed at least one AI tool, only 18% report having scaled AI use in ways that drove measurable innovation (McKinsey & Company, 2024).

This disconnect reveals a fundamental gap in understanding how metacognition, emotion, and creative intelligence intersect in human-AI collaboration.

Recent research from MIT Sloan shows that unlocking AI's creative potential requires more than better prompts or more powerful models: it demands metacognitive awareness (Lu et al., 2025). This analysis argues that emotional intelligence and symbolic reasoning capabilities, which most organizations also fail to develop, matter as well.

This multidisciplinary analysis synthesizes findings from psychology, neuroscience, and human-computer interaction to reveal why traditional AI implementation strategies fall short and how the science of creative intelligence can transform organizational innovation.

By examining the metacognitive framework that enables effective human-AI creative collaboration, this analysis offers evidence-based strategies for the many organizations currently unable to harness generative AI's creative potential.

I. The Paradox of Generative AI and Creativity

Businesses and organizations around the world are rapidly integrating generative AI systems into their workflows, particularly tools like ChatGPT, Claude, and Gemini, with hopes of unlocking new levels of productivity and innovation.

However, recent empirical findings suggest that these tools are not delivering the creative gains many anticipated.

According to a large-scale Gallup survey, only 26% of employees reported increased creativity after incorporating generative AI into their work (Lu et al., 2025).

This paradox, where a tool designed to augment ideation appears to offer limited creative lift, raises a critical question: why doesn’t generative AI automatically lead to more creative outcomes?

The Creative Measurement Problem

The disconnect between AI adoption and creative outcomes becomes more comprehensible when examined through established creativity research frameworks. Traditional creativity assessment relies heavily on measures of divergent thinking, the ability to generate multiple novel solutions to open-ended problems (Runco & Acar, 2012).

However, most organizational implementations of generative AI focus on convergent tasks: writing emails, summarizing documents, or generating standard reports. This fundamental mismatch between AI deployment strategies and creativity requirements may explain the Gallup survey's modest results.

Marcus (2020) argues that current AI systems suffer from four critical limitations that directly impair creative collaboration: brittleness in novel situations, lack of causal reasoning, absence of robust generalization, and inability to integrate symbolic knowledge with learned patterns.

These limitations manifest precisely in creative contexts where adaptability, insight generation, and meaning-making are paramount. Organizations deploying AI tools without addressing these architectural constraints may be inadvertently constraining rather than enhancing creative potential.

Furthermore, organizational context shapes creative expression in ways that technology alone cannot address. Zhou and George (2001) demonstrated that job dissatisfaction paradoxically leads to increased creativity when employees have voice and feel empowered to express concerns constructively.

This suggests that the 26% figure may reflect deeper organizational dynamics: employees who feel psychologically safe and empowered may be more likely to experiment creatively with AI tools, while those in restrictive environments may use AI primarily for efficiency rather than innovation.

New research led by Jackson G. Lu at MIT Sloan offers a compelling answer: generative AI boosts creativity only when paired with metacognitive strategies, or the ability to reflect on and adapt one’s cognitive processes (Lu et al., 2025). Far from being a plug-and-play solution, generative AI is a collaborative instrument that demands an intentional, reflective user.

This observation reframes the relationship between humans and AI, shifting the conversation from automation to augmentation.

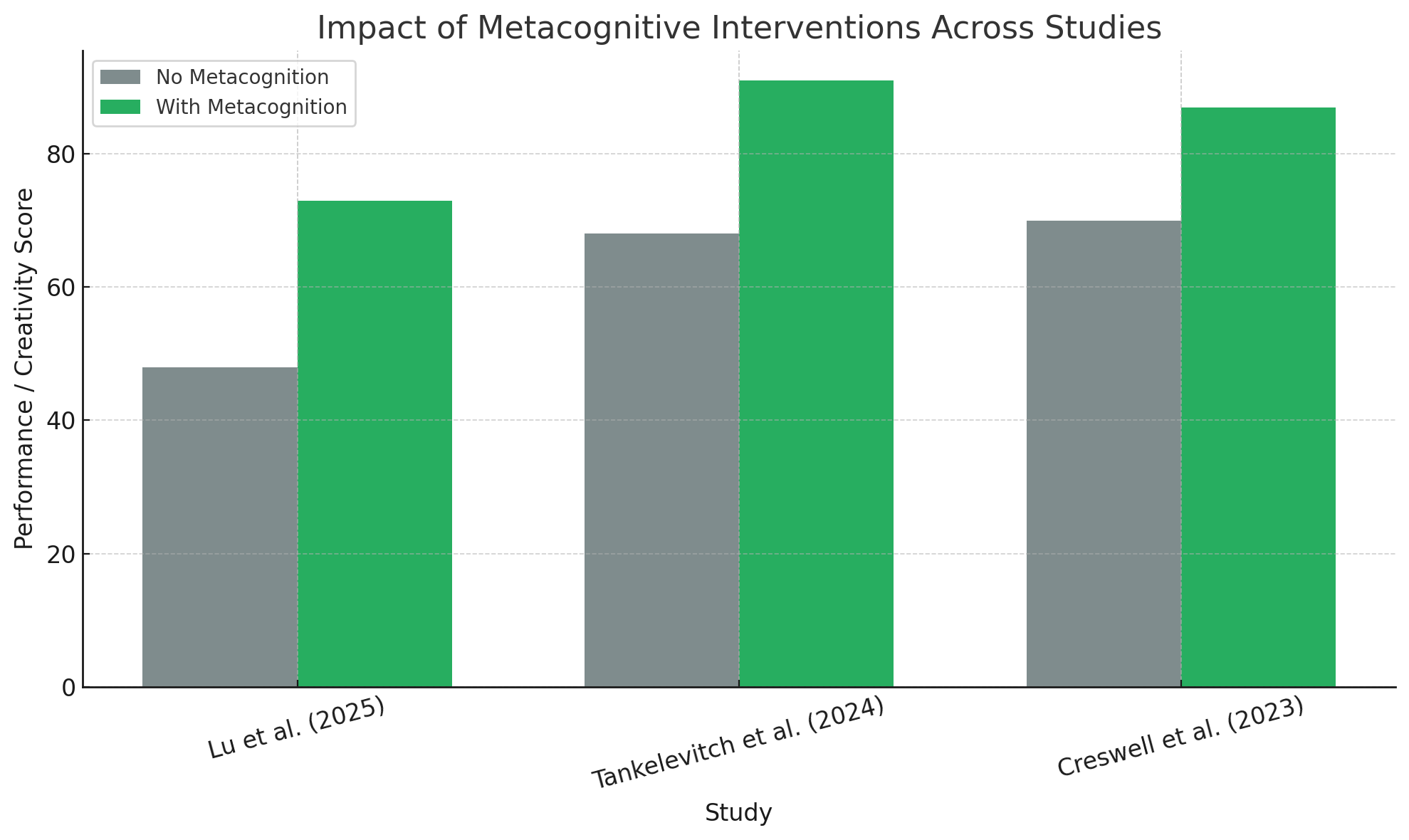

As of 2024, 72% of companies surveyed by McKinsey reported deploying at least one AI tool in their operations, but only 18% claimed to have scaled AI use in a way that drove measurable innovation (McKinsey & Company, 2024). This discrepancy reinforces the need for a metacognitive model of interaction.
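The size of this gap is easier to see as a conditional share. Assuming, as the survey framing suggests but does not state outright here, that every company in the 18% is also among the 72% that deployed AI:

```latex
P(\text{measurable innovation} \mid \text{deployed AI})
  = \frac{P(\text{measurable innovation} \cap \text{deployed AI})}{P(\text{deployed AI})}
  = \frac{0.18}{0.72} = 0.25
```

On that assumption, roughly one adopter in four had turned deployment into measurable innovation, and three in four had not.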

In this exposition, we synthesize findings from psychology, AI architecture, human-computer interaction, and cognitive science to unpack this intersection. We examine how metacognitive capacity moderates the creative benefits of generative AI, explore the limitations of current large language models (LLMs) in processing emotion and meaning, and introduce promising developments in multimodal and neuro-symbolic AI.

Ultimately, we propose a framework for designing the next generation of creative intelligence systems: tools that do not replace human insight, but amplify it through strategic, emotionally attuned, and symbolically aware engagement.

Understanding these measurement and organizational challenges helps explain why metacognitive capacity emerges as the critical moderating factor in AI-assisted creativity. Before turning to the human factors that enable effective creative collaboration with AI, we first consider how creativity in AI-assisted work can be assessed validly.

Toward Valid Creativity Assessment in AI-Assisted Work

The measurement challenge underlying the AI creativity paradox extends beyond simple adoption statistics to fundamental questions about how creativity is defined, measured, and valued in organizational contexts.

Traditional creativity research distinguishes between divergent thinking (generating multiple novel solutions) and convergent thinking (selecting optimal solutions from alternatives), with most validated assessments focusing on divergent processes (Runco & Acar, 2012).

However, organizational implementations of generative AI predominantly target convergent tasks (document summarization, email composition, and template generation), creating a fundamental measurement-implementation mismatch.

This disconnect reveals a critical gap in how organizations conceptualize creative work. While academic creativity research emphasizes originality, fluency, and flexibility in ideation (Runco & Acar, 2012), workplace creativity assessments often prioritize efficiency, consistency, and risk mitigation.

The 26% figure from the Gallup survey may thus reflect not AI's creative limitations but the narrow scope of how creativity is currently measured and valued in organizational settings.
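The originality, fluency, and flexibility indices mentioned above are typically scored from open-ended tasks such as alternative-uses prompts. The sketch below is a minimal Python illustration of one common scheme, assuming ideas have already been normalized and hand-coded into categories. The function name, the toy responses, and the rarity cutoff are hypothetical; this is not a validated instrument or the scoring used in any cited study.

```python
from collections import Counter


def score_divergent_thinking(responses_by_person, category_of, rarity_threshold=0.05):
    """Return fluency, flexibility, and originality scores per participant.

    responses_by_person: participant id -> list of normalized idea strings
    category_of: idea string -> semantic category (assumed hand-coded by raters)
    rarity_threshold: an idea counts as original if fewer than this share of
        participants produced it (hypothetical cutoff; studies vary)
    """
    n_people = len(responses_by_person)
    # How many participants produced each idea (repeats within one person count once).
    idea_counts = Counter(
        idea for ideas in responses_by_person.values() for idea in set(ideas)
    )
    scores = {}
    for person, ideas in responses_by_person.items():
        unique_ideas = set(ideas)
        scores[person] = {
            # Fluency: number of distinct ideas.
            "fluency": len(unique_ideas),
            # Flexibility: number of distinct categories those ideas span.
            "flexibility": len({category_of[idea] for idea in unique_ideas}),
            # Originality: number of statistically infrequent ideas.
            "originality": sum(
                1 for idea in unique_ideas
                if idea_counts[idea] / n_people < rarity_threshold
            ),
        }
    return scores


# Toy "alternative uses for a brick" responses (illustrative only, not real data).
responses = {
    "a": ["build a wall", "doorstop", "paperweight"],
    "b": ["build a wall", "doorstop"],
    "c": ["build a wall", "self-defense"],
}
categories = {
    "build a wall": "construction",
    "doorstop": "household",
    "paperweight": "household",
    "self-defense": "weapon",
}
print(score_divergent_thinking(responses, categories, rarity_threshold=0.5))
```

Scoring originality by statistical infrequency makes it depend on the comparison sample, which is why the toy example uses a loose cutoff; with realistic sample sizes a much smaller share is typical, and researchers often supplement infrequency with rater judgments of novelty.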

The Lu et al. (2025) study offers a more rigorous approach by incorporating multiple evaluation perspectives (supervisors, external evaluators, and objective creativity metrics) while controlling for task complexity and individual differences.

Their findings suggest that valid creativity assessment in AI-assisted work requires:

Multi-perspective evaluation that captures both process and outcome creativity

Task-appropriate metrics that distinguish between convergent and divergent creative demands

Individual difference considerations that account for metacognitive capacity and domain expertise

Longitudinal assessment that tracks creative development over time rather than isolated performance snapshots

Organizations seeking to optimize AI-assisted creativity must therefore develop more sophisticated measurement frameworks that align assessment methods with the cognitive demands of creative collaboration rather than traditional productivity metrics.
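Multi-perspective evaluation raises a practical question: how to combine raters who use the scale differently. One simple approach, sketched here under the assumption that each rater scores several participants on a numeric scale, is to standardize scores within each rater before averaging. This is an illustrative choice, not necessarily the procedure used by Lu et al. (2025), and `composite_creativity` is a hypothetical helper.

```python
from statistics import mean, pstdev


def composite_creativity(ratings):
    """Combine several raters' scores into one standardized composite per participant.

    ratings: rater name -> {participant id -> raw creativity score}
    Each rater's scores are z-scored first so that a lenient or harsh rater,
    or one using a different scale, does not dominate the composite.
    """
    standardized = {}
    for scores in ratings.values():
        values = list(scores.values())
        mu, sigma = mean(values), pstdev(values)
        for person, raw in scores.items():
            z = 0.0 if sigma == 0 else (raw - mu) / sigma
            standardized.setdefault(person, []).append(z)
    # Average over whichever raters scored each participant.
    return {person: mean(zs) for person, zs in standardized.items()}


# Hypothetical example: a supervisor on a 1-5 scale and an external judge on a 1-10 scale.
ratings = {
    "supervisor": {"p1": 4, "p2": 2},
    "external": {"p1": 7, "p2": 5},
}
print(composite_creativity(ratings))
```

Standardizing within raters removes differences in leniency and scale, but it also assumes each rater saw a comparable pool of participants; when that does not hold, a model-based approach is safer.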

II. Metacognition: The Hidden Driver of Effective AI Use

Metacognition, defined as "thinking about one’s thinking," involves planning, monitoring, and evaluating cognitive strategies (Flavell, 1979). It plays a critical role in adaptive learning and problem-solving (Schraw & Dennison, 1994) and is increasingly recognized as a core differentiator in how individuals engage with AI systems (Tankelevitch et al., 2024).

The MIT Sloan field experiment by Lu et al. (2025) examined 250 employees at a Chinese technology consulting firm. Employees were randomly assigned either to use ChatGPT to assist with creative tasks or to work without it. Supervisors and external evaluators assessed the creativity of their work.

The key finding was striking: employees with high metacognitive awareness who used ChatGPT were rated significantly more creative than their peers, while those without such awareness derived little to no benefit. This suggests that metacognitive strategy is not only a moderator but a prerequisite for creative AI engagement.
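Statistically, this pattern is an interaction (moderation) effect: the benefit of using ChatGPT depends on metacognitive level. A minimal descriptive sketch compares the AI effect within each metacognition group. The cell labels and toy ratings are hypothetical and are not data from the study; a real analysis would estimate the interaction term in a regression model with standard errors and controls rather than compare raw means.

```python
from statistics import mean


def interaction_contrast(cells):
    """Difference-in-differences of cell means in a 2 x 2 design.

    cells: (uses_ai, high_metacognition) -> list of creativity ratings
    Returns (AI effect for high-metacognition employees,
             AI effect for low-metacognition employees,
             their difference, i.e. the interaction contrast).
    """
    m = {cell: mean(scores) for cell, scores in cells.items()}
    effect_high = m[(True, True)] - m[(False, True)]
    effect_low = m[(True, False)] - m[(False, False)]
    return effect_high, effect_low, effect_high - effect_low


# Arbitrary toy ratings, chosen only to show the shape of the pattern.
cells = {
    (True, True): [5, 7],
    (False, True): [4, 4],
    (True, False): [3, 5],
    (False, False): [4],
}
print(interaction_contrast(cells))
```

A positive contrast with a near-zero effect in the low group is the shape the text describes: AI use helps only where metacognition is high.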

High-metacognition individuals display behaviors such as evaluating the adequacy of AI-generated output, refining prompts through iterative reasoning, and switching strategies when progress stalls. These users do not merely consume AI-generated ideas; they probe, reshape, and remix them. This behavior parallels the distinction Bengio (2021) draws between "System 1" fast, intuitive processing and "System 2" deliberate reasoning, a shift he argues deep learning systems themselves must make.

Further evidence from Creswell et al. (2023) reveals that structured metacognitive interventions, such as brief mindfulness-based reflection exercises, can improve both task performance and emotional regulation. This highlights the value of embedding metacognitive prompts or nudges directly into AI interfaces to foster real-time reflection.

In short, AI does not enhance creativity passively. It demands an active participant capable of metacognitive regulation. Organizations that seek to gain from generative tools must therefore invest in developing these human capacities alongside technological infrastructure.

Metacognitive Architecture for AI Interaction

Recent research in human-computer interaction reveals that generative AI creates unprecedented metacognitive demands on users (Tankelevitch et al., 2024). Unlike traditional software tools that provide predictable, deterministic outputs, generative AI requires users to continuously evaluate, refine, and strategically redirect their interactions.

Tankelevitch and colleagues identified four core metacognitive processes essential for effective AI collaboration: prompt crafting (translating intentions into effective queries), output evaluation (assessing AI-generated content quality and relevance), iterative refinement (systematically improving results through feedback loops), and strategic adaptation (switching approaches when progress stalls).
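The four processes can be read as one control loop. The sketch below is our illustration, not code from Tankelevitch et al. (2024): `generate` stands in for a call to a generative model and `evaluate` for the user's own quality judgment. Both, along with the strategy functions, the target score, and the patience setting, are hypothetical.

```python
from typing import Callable, List, Tuple


def co_create(goal: str,
              strategies: List[Callable[[str, str], str]],
              generate: Callable[[str], str],
              evaluate: Callable[[str], float],
              target: float = 0.8,
              patience: int = 2,
              max_rounds: int = 10) -> Tuple[str, float, int]:
    """Prompt, evaluate, refine, and switch strategy when progress stalls.

    Each strategy builds a prompt from the goal and the best output so far.
    Returns (best output, its score, index of the strategy in use at the end).
    """
    strategy_idx = 0
    prompt = strategies[strategy_idx](goal, "")            # prompt crafting
    best_output, best_score = "", float("-inf")
    stalled = 0
    for _ in range(max_rounds):
        output = generate(prompt)
        score = evaluate(output)                           # output evaluation
        if score > best_score:
            best_output, best_score, stalled = output, score, 0
        else:
            stalled += 1
        if best_score >= target:
            break
        if stalled >= patience and strategy_idx + 1 < len(strategies):
            strategy_idx += 1                              # strategic adaptation
            stalled = 0
        prompt = strategies[strategy_idx](goal, best_output)  # iterative refinement
    return best_output, best_score, strategy_idx


# Toy stand-ins (hypothetical): the "model" echoes the prompt, and the "user"
# rates only outputs that use an analogy highly.
strategies = [
    lambda goal, previous: goal,
    lambda goal, previous: goal + " using an analogy",
]
print(co_create("pitch a new onboarding process", strategies,
                generate=lambda p: p,
                evaluate=lambda out: 0.9 if "analogy" in out else 0.1))
```

The `patience` parameter encodes "when progress stalls": after that many rounds without improvement, the loop moves to the next strategy instead of repeating the same prompt.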

The metacognitive assessment framework developed by Schraw and Dennison (1994) provides a structured approach to identifying individuals likely to succeed with AI-assisted creativity. Their model distinguishes between knowledge of cognition (understanding one's cognitive strengths and limitations) and regulation of cognition (actively monitoring and controlling cognitive processes). High-metacognition individuals would be expected to perform well on both dimensions: accurately judging when AI output requires human intervention and systematically employing strategies to optimize human-AI collaboration.
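As a rough illustration of this two-factor structure, the helper below computes the share of endorsed items for each subscale. It assumes a simplified true/false response format and a hypothetical item-to-subscale mapping; it does not reproduce the published inventory's items or scoring key.

```python
def score_subscales(answers, item_to_subscale):
    """Proportion of endorsed ("true") items per subscale.

    answers: item number -> bool (True = "this statement describes me")
    item_to_subscale: item number -> "knowledge" or "regulation"
        (hypothetical mapping for illustration only)
    Unanswered items count as not endorsed.
    """
    totals, endorsed = {}, {}
    for item, subscale in item_to_subscale.items():
        totals[subscale] = totals.get(subscale, 0) + 1
        if answers.get(item, False):
            endorsed[subscale] = endorsed.get(subscale, 0) + 1
    return {s: endorsed.get(s, 0) / totals[s] for s in totals}


mapping = {1: "knowledge", 2: "knowledge", 3: "regulation", 4: "regulation", 5: "regulation"}
answers = {1: True, 2: False, 3: True, 4: True, 5: False}
print(score_subscales(answers, mapping))
```

Keeping the two subscales separate matters for the argument above: someone can know their cognitive limits well yet rarely act on that knowledge while working with AI.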

Creswell et al. (2023) offer promising evidence that metacognitive capacity can be developed through targeted interventions. Their mindfulness-based training programs, incorporating brief reflection exercises and self-monitoring prompts, significantly improved both task performance and emotional regulation among participants. Critically, these interventions proved most effective when embedded directly into workflow contexts rather than delivered as standalone training modules. This finding suggests that AI interfaces could be designed to incorporate metacognitive scaffolding—real-time prompts that encourage users to reflect on their cognitive strategies and adapt their approach accordingly.

The implications for AI system design are profound. Rather than optimizing solely for output quality or processing speed, next-generation creative AI tools should prioritize metacognitive transparency: making their reasoning processes visible to users, highlighting uncertainty and limitations, and providing structured opportunities for human reflection and strategic adjustment.

While metacognitive strategies optimize human-AI interaction, they cannot overcome fundamental limitations in how current AI systems process emotional and symbolic information.

III. Emotion, Meaning, and the Limitations of Large Language Models

Despite their impressive linguistic capabilities, large language models such as GPT-4 still lack fundamental abilities in emotional reasoning, meaning-making, and self-awareness. Recent work in Nature Communications (Griot et al., 2025) found that even state-of-the-art LLMs failed to demonstrate reliable metacognition when tasked with medical reasoning. These systems produce confident but incorrect responses and are unable to assess their own knowledge boundaries.

This limitation becomes particularly acute in emotionally sensitive or narrative-driven tasks, where nuance, tone, and empathy are critical. As Filimowicz (2024) argues, LLMs generate grammatically correct content but cannot assess the emotional valence or motivational subtext behind their outputs. This absence of emotional inference impairs their ability to contribute meaningfully to human-centered creative processes.

Multimodal AI systems, such as those trained on the CMU-MOSEI dataset (Zadeh et al., 2018), represent one avenue toward addressing this gap. These models combine textual, visual, and auditory cues to infer sentiment and affective state. For instance, Zadeh’s team reports that incorporating voice tone and facial expression recognition improves emotion classification accuracy by over 20% compared to text-only inputs.
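One simple way to combine modalities is late fusion: each modality's classifier outputs class probabilities, and the system averages them, optionally with weights. This Python sketch illustrates that general technique as surveyed by Baltrušaitis et al. (2019); it is not the fusion architecture used in the CMU-MOSEI work, and the modality names, labels, and probabilities are hypothetical.

```python
def late_fusion(modality_probs, weights=None):
    """Weighted average of per-modality class-probability dicts.

    modality_probs: modality name -> {label -> probability}
    weights: modality name -> non-negative weight (defaults to equal weights)
    Returns (most probable label, fused probability dict).
    """
    if weights is None:
        weights = {m: 1.0 for m in modality_probs}
    total_weight = sum(weights[m] for m in modality_probs)
    labels = set().union(*(probs.keys() for probs in modality_probs.values()))
    fused = {
        label: sum(weights[m] * probs.get(label, 0.0)
                   for m, probs in modality_probs.items()) / total_weight
        for label in labels
    }
    return max(fused, key=fused.get), fused


# Hypothetical outputs: text alone is ambiguous, tone of voice and face are not.
predictions = {
    "text": {"joy": 0.5, "anger": 0.5},
    "audio": {"joy": 0.2, "anger": 0.8},
    "visual": {"joy": 0.3, "anger": 0.7},
}
print(late_fusion(predictions))
```

In this example the text channel alone is split evenly between joy and anger, while the audio and visual channels tip the fused estimate toward anger. Late fusion is easy to build from existing unimodal models but cannot capture fine-grained interactions between modalities, which is what more elaborate fusion architectures aim for.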

Emotion-aware toolkits like EmoTxt (Calefato et al., 2019) also enable text-based sentiment detection with customizable taxonomies. However, even these tools are often limited to surface-level detection without deep semantic understanding.

Emotion awareness in AI is not merely a technical challenge but a philosophical one. Meaning arises not just from language but from embodied context, cultural norms, and individual psychology. Without a theory of mind or reflective capability, current LLMs cannot generate content that resonates on an emotional or existential level (Ghosh & Chollet, 2023).

Thus, the future of emotionally resonant AI-assisted creativity requires not only better models but a deeper integration of symbolic and affective reasoning into system design.

Multimodal Emotion Processing

The multimodal machine learning paradigm offers a promising pathway beyond the emotional limitations of text-only language models (Baltrušaitis et al., 2019). Human creativity inherently operates across multiple sensory modalities—visual imagery, auditory patterns, kinesthetic experiences, and linguistic expression interact dynamically during creative processes. AI systems that process only textual inputs miss crucial emotional and contextual information encoded in tone, gesture, facial expression, and visual composition.

The CMU-MOSEI dataset research demonstrates that incorporating multimodal inputs dramatically improves emotion recognition accuracy, with combined text-audio-visual processing outperforming single-modality approaches by over 20% (Zadeh et al., 2018). However, accuracy improvements represent only the first step toward emotionally intelligent creative collaboration. True emotional reasoning requires understanding not just what emotions are present, but why they arise, how they influence creative choices, and what emotional trajectories might enhance or constrain creative exploration.

Current emotion-aware toolkits like EmoTxt provide valuable capabilities for surface-level sentiment detection but lack the deeper semantic understanding necessary for creative collaboration (Calefato et al., 2019). The challenge extends beyond technical detection to philosophical questions about meaning and intentionality. As Ghosh and Chollet (2023) argue, large language models cannot genuinely reason about emotion because they lack experiential grounding in embodied, social, and cultural contexts that give emotional expressions their meaning.

This limitation suggests that future emotionally intelligent creative AI systems will require not only multimodal processing capabilities but also explicit representation of cultural context, social dynamics, and individual psychological profiles. Such systems would move beyond emotion detection toward emotion reasoning—understanding why particular emotional responses enhance or impair creative outcomes and adapting their collaboration strategies accordingly.

Neuro-Symbolic Architectures for Creative Intelligence

The limitations of purely neural approaches to AI creativity have catalyzed renewed interest in hybrid architectures that combine neural learning with symbolic reasoning. Neuro-symbolic AI represents a paradigm shift from black-box language models toward transparent, interpretable systems capable of both pattern recognition and logical inference (Garcez et al., 2019).

Recent breakthroughs in neuro-symbolic concept learning demonstrate how AI systems can acquire abstract knowledge through experience while maintaining symbolic transparency (Crouse et al., 2023). These systems excel at tasks requiring both pattern recognition and causal reasoning—precisely the cognitive demands inherent in creative problem-solving. Unlike traditional neural networks that learn implicit associations, neuro-symbolic architectures can explicitly represent relationships between concepts, enabling more sophisticated reasoning about creative constraints and possibilities.

The SenticNet 6 framework exemplifies successful integration of symbolic and subsymbolic approaches to emotion recognition (Cambria et al., 2020). By combining machine learning algorithms with hand-crafted knowledge bases, SenticNet achieves superior performance in sentiment analysis while maintaining interpretability. This hybrid approach addresses a critical limitation in current creative AI: the inability to reason explicitly about emotional context and interpersonal dynamics.
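The general idea of a hybrid system (explicit knowledge where it exists, a learned model elsewhere, and a readable rationale either way) can be sketched in a few lines. This is not SenticNet's data or API: the concept lexicon, the single negation rule, and the `learned_model_polarity` placeholder are all hypothetical.

```python
import re

# Hypothetical hand-crafted concept lexicon (the symbolic layer); polarity in [-1, 1].
CONCEPT_POLARITY = {
    "creative breakthrough": 0.9,
    "inspiring": 0.8,
    "boring": -0.6,
    "missed deadline": -0.7,
}
NEGATORS = {"not", "never", "no"}


def learned_model_polarity(text):
    """Placeholder for a trained (subsymbolic) sentiment model; hypothetical."""
    return 0.0


def hybrid_polarity(text):
    """Return (polarity, rationale). Known concepts are scored symbolically and
    explained; text with no known concept falls back to the learned model."""
    lowered = text.lower()
    rationale = []
    for concept, polarity in CONCEPT_POLARITY.items():
        for match in re.finditer(r"\b" + re.escape(concept) + r"\b", lowered):
            # Explicit rule: a negator immediately before the concept flips polarity.
            preceding_words = re.findall(r"[a-z']+", lowered[:match.start()])
            negated = bool(preceding_words) and preceding_words[-1] in NEGATORS
            rationale.append((concept, -polarity if negated else polarity,
                              "negated" if negated else "direct match"))
    if rationale:
        return sum(score for _, score, _ in rationale) / len(rationale), rationale
    return learned_model_polarity(text), [("no known concept", None, "learned-model fallback")]


print(hybrid_polarity("The workshop was not boring"))
print(hybrid_polarity("A creative breakthrough, despite a missed deadline"))
```

The value of the symbolic layer here is less the score than the rationale: a user can see which concept drove the judgment and whether a rule such as negation was applied.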

Chella et al. (2020) propose a cognitive architecture for emotional awareness that integrates symbolic reasoning about mental states with neural processing of sensory inputs. Their framework includes explicit representations of emotional goals, social context, and cultural norms—elements essential for human-centered creativity but absent from current language models. This architecture suggests a pathway toward AI systems capable of generating content that resonates emotionally while remaining transparent about their reasoning processes.

The convergence of these approaches points toward a new generation of creative intelligence systems: tools that combine the pattern recognition capabilities of neural networks with the interpretability and causal reasoning of symbolic AI. Such systems would address the core limitations identified by Marcus (2020) while supporting the metacognitive engagement strategies essential for human creativity.

Even well-designed hybrid systems, however, create value only when organizations can put them into practice, the challenge to which we now turn.

IV. From Research to Practice: Implementation Science for AI Creativity

Despite compelling research evidence, translating metacognitive AI frameworks into scalable organizational interventions presents significant implementation science challenges. The gap between the 72% AI adoption rate and 18% innovation impact suggests that most organizations lack systematic approaches to developing human capabilities alongside technological infrastructure.

Training Protocol Development

Effective metacognitive training for AI creativity requires moving beyond traditional technology adoption models toward competency-based development approaches. Drawing on Creswell et al. (2023) and Tankelevitch et al. (2024), effective interventions could include:

Microlearning modules embedded within AI interfaces that provide just-in-time metacognitive prompts

Peer coaching networks where high-metacognition individuals mentor colleagues in AI collaboration strategies

Reflective journaling tools integrated into workflow systems that encourage users to document and analyze their AI interaction patterns

Simulated practice environments where users can experiment with different prompting strategies without performance pressure
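A reflective journaling tool needs some summary of how a user actually interacted with the AI. The sketch below assumes a hypothetical event log in which each AI suggestion is marked as accepted unchanged, edited, rejected, or re-prompted, and it picks a reflection question using simple, made-up thresholds.

```python
from collections import Counter

# Hypothetical reflection prompts a journaling tool might surface.
REFLECTION_QUESTIONS = {
    "uncritical_acceptance": "Which AI suggestions did you accept unchanged, and what made them good enough?",
    "frequent_reprompting": "What kept missing in the AI's answers? Could the task itself be reframed?",
    "default": "What did you change in the AI's output, and what did that reveal about your goal?",
}


def summarize_session(events, accept_threshold=0.7, reprompt_threshold=0.5):
    """Summarize one session's AI interactions and pick a reflection question.

    events: list of dicts with an "action" key: "accepted" (used unchanged),
        "edited", "rejected", or "reprompted" (an assumed, hypothetical log schema)
    """
    counts = Counter(event["action"] for event in events)
    total = len(events)
    accept_rate = counts["accepted"] / total if total else 0.0
    reprompt_rate = counts["reprompted"] / total if total else 0.0
    if total and accept_rate >= accept_threshold:
        key = "uncritical_acceptance"
    elif total and reprompt_rate >= reprompt_threshold:
        key = "frequent_reprompting"
    else:
        key = "default"
    return {
        "counts": dict(counts),
        "accept_rate": accept_rate,
        "reprompt_rate": reprompt_rate,
        "question": REFLECTION_QUESTIONS[key],
    }


session = [{"action": "accepted"}] * 4 + [{"action": "edited"}]
print(summarize_session(session))
```

A high rate of unchanged acceptance is not necessarily a problem, which is why the sketch responds with a question rather than a warning.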

Change Management Strategies

The Zhou and George (2001) research on employee voice and creativity provides crucial insights for AI implementation. Organizations must create psychologically safe experimentation spaces where employees feel comfortable exploring AI capabilities without fear of making mistakes or appearing incompetent. This requires:

Leadership modeling of experimental AI use and transparent discussion of failures and learning

Revised performance metrics that reward creative experimentation rather than only successful outcomes

Community of practice development that enables peer learning and knowledge sharing about effective AI collaboration

Cultural norm evolution that reframes AI from threat to collaborative partner

Assessment and ROI Measurement

Measuring return on investment for metacognitive AI training requires sophisticated approaches that capture both immediate performance improvements and longer-term creative capacity development. Organizations should implement:

Baseline creativity assessments using validated instruments (Runco & Acar, 2012) before AI implementation

Longitudinal tracking of creative output quality, quantity, and user satisfaction over 6-12 month periods

Comparative analysis between high and low metacognitive capacity users to identify training priorities

Qualitative feedback collection that captures user experiences and identifies implementation barriers
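For longitudinal tracking and group comparison, one simple index is each user's rate of change in rated creativity over the tracking period. The sketch assumes a hypothetical data layout (per-user lists of (month, score) pairs plus a high/low metacognition flag) and uses an ordinary least-squares slope per user; a full analysis would more likely use mixed-effects models that handle unequal observation counts and nesting within teams.

```python
from statistics import mean


def ols_slope(times, values):
    """Least-squares slope of values over time (e.g., score change per month)."""
    t_bar, v_bar = mean(times), mean(values)
    numerator = sum((t - t_bar) * (v - v_bar) for t, v in zip(times, values))
    denominator = sum((t - t_bar) ** 2 for t in times)
    return numerator / denominator


def compare_growth(histories, high_metacognition):
    """Mean creativity growth rate for high vs. low metacognition users.

    histories: user -> list of (month, creativity score) pairs
    high_metacognition: user -> bool (hypothetical classification)
    Returns (mean slope high, mean slope low, difference).
    """
    slopes = {user: ols_slope(*zip(*obs)) for user, obs in histories.items()}
    high = [s for user, s in slopes.items() if high_metacognition[user]]
    low = [s for user, s in slopes.items() if not high_metacognition[user]]
    return mean(high), mean(low), mean(high) - mean(low)


# Illustrative toy histories over a 12-month window (not real data).
histories = {
    "u1": [(0, 3), (6, 5), (12, 7)],
    "u2": [(0, 3), (12, 4)],
    "u3": [(0, 4), (6, 4), (12, 4)],
}
flags = {"u1": True, "u2": False, "u3": False}
print(compare_growth(histories, flags))
```

Each user needs at least two distinct time points; otherwise the slope is undefined and `ols_slope` raises `ZeroDivisionError`.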

Addressing Scalability Concerns

The multinational consultancy pilot described later in this analysis (48% more novel outputs in structured brainstorming sessions) provides preliminary evidence for scalability, but systematic replication studies are needed across diverse organizational contexts. Key scalability factors include:

Technology infrastructure requirements for real-time metacognitive scaffolding

Training resource allocation for developing internal coaching capabilities

Cultural adaptation strategies for global implementations

Sustainability mechanisms that maintain metacognitive practices over time

Organizations that successfully scale AI creativity capabilities will likely be those that invest as heavily in human capacity development as they do in technological infrastructure acquisition.

These organizational strategies, in turn, depend on AI tools designed to support reflective, co-creative engagement rather than one-click automation.

V. Designing Creative Intelligence Systems: Toward Human-AI Co-Creation

The synthesis of metacognition, emotion, and symbolic reasoning enables a new paradigm in AI system design: creative intelligence systems. These systems are not task-completion engines but co-creative collaborators. They adapt to human goals, invite reflection, and evolve over time.

A truly creative intelligence system might include prompt scaffolding that encourages metacognitive questioning (Lu et al., 2025), sentiment feedback loops to detect user engagement or frustration (Chella et al., 2020), and a symbolic memory layer that stores conceptual associations for remixing (Garcez et al., 2019).

Tankelevitch et al. (2024) describe several HCI prototypes that incorporate reflective scaffolding, such as conversational agents that ask users to explain their thinking or explore alternatives. In user studies, these features improved perceived creativity and engagement across both novice and expert users.

Designing for co-creation also involves rethinking user experience. Rather than hiding complexity behind "one-click" AI tools, interfaces could surface cognitive choices—such as asking the user whether they want divergent or convergent outputs, or offering counterfactual suggestions to stretch their imagination. These interactions nurture the user’s creative agency.

At the organizational level, pairing generative AI with lightweight metacognitive training could produce measurable gains in output quality and team innovation (Lu et al., 2025). In a recent pilot by a multinational consultancy, teams given prompt design frameworks and reflective guides produced 48% more novel outputs in structured brainstorming sessions.

Companies that succeed will not be those with the most advanced AI, but those who cultivate the most reflective, emotionally aware, and symbolically fluent AI users.

User Input Interface

- Natural language queries

- Keystroke and voice signals

- Eye-tracking data (if available)

Cognitive State Monitor

- Detects hesitation & revision patterns

- Uncertainty & cognitive load inference

- Identifies productive vs. unproductive loops

Scaffolding Engine

- Dynamically adjusts support

- Suggests strategies & decompositions

- Accounts for user experience level

Symbolic Reasoning Core

- Ontological knowledge graphs

- Analogical & metaphor generation

- Constraint satisfaction frameworks

- Cultural and contextual modeling

Explainability Layer

- Confidence display & rationale summaries

- Bias detection alerts

- Process trace visualizations

Creative Output Generator

- Draft output & multiple versions

- Editable co-creation interface

- Emotionally adaptive suggestions

Technical Architecture for Metacognitive AI Interfaces

Translating metacognitive principles into practical AI system design requires specific technical capabilities that current large language models lack. Based on the neuro-symbolic frameworks discussed earlier, effective metacognitive AI interfaces should incorporate the following architectural components:

Real-Time Cognitive State Monitoring

Advanced AI creativity systems require continuous assessment of user cognitive state to provide appropriate metacognitive scaffolding. This involves:

Keystroke dynamics analysis to detect hesitation, revision patterns, and cognitive load indicators

Natural language processing of user queries to identify uncertainty markers, goal ambiguity, and strategic shifts

Interaction pattern recognition that distinguishes between productive iteration and unproductive cycling

Multimodal sensing (where available) that incorporates voice stress, eye tracking, and facial expression data
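Keystroke dynamics can be reduced to a few interpretable features. The sketch below computes long pauses and deletion rate from a time-stamped key log. The log format, the "Backspace" key name, and all thresholds are assumptions, and such signals are at best noisy proxies for hesitation or cognitive load.

```python
def keystroke_features(events, pause_threshold_ms=2000):
    """Crude hesitation and revision indicators from a keystroke log.

    events: time-ordered list of (timestamp_ms, key) tuples; "Backspace" marks a deletion.
    pause_threshold_ms: gap treated as a long pause (hypothetical value).
    """
    if len(events) < 2:
        return {"long_pauses": 0, "backspace_ratio": 0.0, "mean_interval_ms": 0.0}
    # Inter-key intervals between consecutive keystrokes.
    intervals = [later[0] - earlier[0] for earlier, later in zip(events, events[1:])]
    backspaces = sum(1 for _, key in events if key == "Backspace")
    return {
        "long_pauses": sum(1 for gap in intervals if gap >= pause_threshold_ms),
        "backspace_ratio": backspaces / len(events),
        "mean_interval_ms": sum(intervals) / len(intervals),
    }


def looks_hesitant(features, max_pauses=1, max_backspace_ratio=0.25):
    """Hypothetical rule: flag hesitation when pauses or deletions exceed the limits."""
    return (features["long_pauses"] > max_pauses
            or features["backspace_ratio"] > max_backspace_ratio)


log = [(0, "h"), (150, "e"), (3000, "Backspace"), (3100, "a"), (6000, "y")]
features = keystroke_features(log)
print(features, looks_hesitant(features))
```

Thresholds like these would need calibrating per user, since typing speed and editing habits vary widely between people.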

Adaptive Scaffolding Algorithms

Rather than providing static prompts, metacognitive AI systems should dynamically adjust their support based on user needs:

IF user_uncertainty_detected AND task_complexity_high:
    PROVIDE structured_problem_decomposition_prompts
ELIF user_satisfaction_low AND iteration_count_high:
    SUGGEST strategy_switching_options
ELIF user_expertise_low AND output_quality_declining:
    OFFER concept_explanation_and_examples
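The rules above translate directly into a runnable Python function. Every threshold and every field of the hypothetical `UserState` record is an assumption standing in for the outputs of the monitoring components. As in the original rules, the first matching condition wins, and `None` means no intervention.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UserState:
    # All fields are assumed outputs of upstream monitors (hypothetical).
    uncertainty: float       # 0..1, e.g. inferred from hedging language in queries
    task_complexity: float   # 0..1
    satisfaction: float      # 0..1, e.g. from explicit ratings
    iteration_count: int     # prompts issued on the current task
    expertise: float         # 0..1
    quality_trend: float     # change in rated output quality over recent turns


def choose_scaffold(s: UserState) -> Optional[str]:
    """Apply the IF/ELIF rules in order; thresholds are hypothetical."""
    if s.uncertainty > 0.6 and s.task_complexity > 0.7:
        return "structured_problem_decomposition_prompts"
    if s.satisfaction < 0.4 and s.iteration_count > 5:
        return "strategy_switching_options"
    if s.expertise < 0.3 and s.quality_trend < 0:
        return "concept_explanation_and_examples"
    return None


print(choose_scaffold(UserState(uncertainty=0.1, task_complexity=0.5, satisfaction=0.2,
                                iteration_count=8, expertise=0.9, quality_trend=0.0)))
```

Making the fall-through case explicit (`None`) matters in practice: a scaffolding engine that always intervenes would add exactly the metacognitive overload this framework tries to avoid.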

Symbolic Knowledge Integration

Effective creative AI requires explicit representation of domain knowledge, creative constraints, and goal structures:

Ontological frameworks that capture relationships between creative concepts and techniques

Constraint satisfaction systems that help users navigate creative trade-offs

Analogical reasoning modules that suggest creative connections across domains

Cultural context databases that adapt creative suggestions to user background and preferences
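As a deliberately crude illustration of ontological and analogical components working together, the sketch below stores concepts as hypothetical (concept, relation, value) triples and proposes cross-domain analogies by the overlap of relation types. Real analogical reasoning aligns relational structure far more carefully; the triples, domains, and overlap cutoff here are invented for the example.

```python
from collections import defaultdict

# Hypothetical mini-ontology: (concept, relation, value) triples plus a domain per concept.
TRIPLES = [
    ("beehive", "has_part", "cells"),
    ("beehive", "coordinates_via", "signals"),
    ("beehive", "goal", "shared_storage"),
    ("open_office", "has_part", "desks"),
    ("open_office", "coordinates_via", "conversation"),
    ("open_office", "goal", "collaboration"),
    ("ant_colony", "coordinates_via", "pheromones"),
    ("ant_colony", "goal", "foraging"),
    ("library", "has_part", "shelves"),
]
DOMAIN = {"beehive": "biology", "ant_colony": "biology",
          "open_office": "workplace", "library": "workplace"}


def relation_profile(triples):
    """Map each concept to the set of relation types it participates in."""
    profile = defaultdict(set)
    for concept, relation, _ in triples:
        profile[concept].add(relation)
    return profile


def cross_domain_analogies(target, triples, domain, min_overlap=0.5):
    """Rank concepts from other domains by Jaccard overlap of relation types."""
    profile = relation_profile(triples)
    target_relations = profile[target]
    results = []
    for concept, relations in profile.items():
        if domain[concept] == domain[target]:
            continue  # analogies are only interesting across domains
        overlap = len(target_relations & relations) / len(target_relations | relations)
        if overlap >= min_overlap:
            results.append((concept, round(overlap, 2)))
    return sorted(results, key=lambda pair: -pair[1])


print(cross_domain_analogies("open_office", TRIPLES, DOMAIN))
```

Because the candidates come from explicit triples, the system can also show why it proposed "beehive" for "open_office" (shared parts, coordination, and goal relations), which supports the transparency goals described here.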

Transparency and Explainability Features

Metacognitive collaboration requires AI systems that can articulate their reasoning processes:

Confidence calibration displays that show AI uncertainty about outputs

Alternative generation explanations that reveal why certain options were suggested

Bias detection alerts that warn users about potential limitations in AI recommendations

Process visualization tools that show the creative journey and decision points
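Confidence calibration displays presuppose a way to check whether stated confidence matches accuracy, the gap Griot et al. (2025) documented in medical reasoning. A common summary is the binned expected calibration error (ECE): the weighted average gap between mean confidence and accuracy across confidence bins. The sketch assumes each output carries a confidence between 0 and 1 and a correctness label; how a deployed system obtains trustworthy confidences is itself an open problem.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Binned ECE: sum over bins of (bin share) * |accuracy - mean confidence|.

    confidences: stated confidence per output, in [0, 1]
    correct: whether each output was actually correct
    """
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # a confidence of 1.0 goes in the top bin
        bins[idx].append((conf, ok))
    ece = 0.0
    for members in bins:
        if members:
            avg_conf = sum(c for c, _ in members) / len(members)
            accuracy = sum(1 for _, ok in members if ok) / len(members)
            ece += (len(members) / n) * abs(accuracy - avg_conf)
    return ece


# An overconfident system: 90% stated confidence, 25% actual accuracy.
print(expected_calibration_error([0.9, 0.9, 0.9, 0.9], [True, False, False, False]))
```

A display built on this measure could, for example, tell users that answers stated at 90% confidence have historically been right far less often, which is precisely the kind of cue that invites metacognitive scrutiny.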

Computational Requirements and Trade-offs

Implementing these capabilities involves significant computational and practical costs:

Inference latency increases with symbolic reasoning complexity

Memory requirements scale with knowledge base size and user history

Privacy considerations for cognitive state monitoring and personal data storage

Energy consumption for continuous multimodal processing

Organizations must balance these technical demands against creative benefits, potentially implementing tiered systems where advanced metacognitive features are available for high-stakes creative tasks while simpler interfaces serve routine applications.

VI. Barriers and Bridges: Cognitive Gaps and Institutional Opportunities

Despite the compelling potential of metacognitive and emotionally aware AI systems, significant barriers remain. Many users lack basic literacy in prompting, much less the reflective habits necessary to interact metacognitively. In enterprise settings, overreliance on automated tools can lead to homogenized outputs and a false sense of productivity.

Bridging this gap begins with training. Lu et al. (2025) emphasize that metacognitive strategies are learnable skills. Even brief interventions—such as social-psychological exercises or micro-trainings embedded within software—can cultivate reflective habits. For example, Creswell et al. (2023) demonstrated that short-form mindfulness-based prompts improved attentional control and adaptive thinking in diverse adult learners.

Institutionally, organizations must move beyond a narrow focus on performance metrics and embrace a broader vision of creativity as a process. This means incentivizing experimentation, embracing interpretive diversity, and designing tools that reward curiosity. Emotionally intelligent AI interfaces that respond to tone, hesitation, or frustration could offer not just better outputs—but better experiences.

Governmental and educational institutions are also beginning to experiment with metacognitive AI frameworks.

Finally, investment in neuro-symbolic research and multimodal design is essential for the next generation of AI tools. These systems are not only more transparent but more aligned with how humans naturally think, feel, and create.

Addressing Potential Limitations and Criticisms

While the evidence for metacognitive AI frameworks is compelling, several important limitations and counterarguments deserve consideration:

Metacognitive Overload Concerns

The additional cognitive demands of metacognitive AI interaction may paradoxically impair creativity for some users. Cognitive load theory suggests that individuals with limited working memory capacity may experience performance decrements when required to simultaneously manage creative tasks and metacognitive monitoring (Sweller et al., 2011). This raises questions about whether metacognitive AI benefits are restricted to high-capacity users, potentially exacerbating rather than reducing creative inequality.

Cultural and Individual Bias

Current metacognitive frameworks derive primarily from Western, individualistic cultural contexts and may not translate effectively to collectivistic cultures where group harmony and consensus-building take precedence over individual reflection and experimentation. Conversely, the generalizability of the Lu et al. (2025) findings, drawn from a single Chinese technology consulting firm, to other cultural and organizational contexts remains an open empirical question.

Scalability and Sustainability Challenges

While pilot studies demonstrate promising results, scaling metacognitive training across large organizations presents significant resource and coordination challenges. The 48% improvement figure from the multinational consultancy, while encouraging, requires replication across diverse organizational contexts before definitive conclusions can be drawn about scalability.

Technology Dependence Risks

Extensive reliance on metacognitive AI scaffolding may foster a dependency resembling learned helplessness, in which users struggle to engage in creative work without AI assistance. This dependency could undermine long-term creative capacity development and organizational resilience.

These limitations suggest that metacognitive AI frameworks should be implemented thoughtfully, with careful attention to individual differences, cultural contexts, and long-term capacity building rather than short-term performance optimization.

VII. Conclusion: The Metacognitive Future of AI Creativity

Generative AI holds profound potential, but only if we stop seeing it as an answer and start using it as a partner. Creativity does not emerge from automation alone. It emerges from the dance between cognition, emotion, and symbolic logic.

As Lu (2025) notes, "Generative AI isn’t a plug-and-play solution for creativity. To fully unlock its potential, employees must know how to engage with it—drive the tool, rather than let it drive them."

The future of AI-assisted creativity will not be won by those who prompt the fastest, but by those who think the deepest. In cultivating metacognitive, emotionally aware, and symbolically empowered users, we do not merely evolve our tools; we evolve our thinking.

That is the true frontier of creative intelligence.

APA Reference List

Baltrušaitis, T., Ahuja, C., & Morency, L. P. (2019). Multimodal machine learning: A survey and taxonomy. IEEE Transactions on Pattern Analysis and Machine Intelligence, 41(2), 423–443. https://doi.org/10.1109/TPAMI.2018.2798607

Bengio, Y. (2021). From System 1 deep learning to System 2 deep learning [NeurIPS 2021 Tutorial]. https://yoshuabengio.org/wp-content/uploads/2021/12/System2DL_NeurIPS2021.pdf

Calefato, F., Lanubile, F., & Novielli, N. (2019). EmoTxt: A toolkit for emotion recognition from text. Proceedings of the 7th International Workshop on Emotion Awareness in Software Engineering (SEmotion), 1–7. https://doi.org/10.1109/SEmotion.2019.00006

Cambria, E., Poria, S., Bajpai, R., & Schuller, B. (2020). SenticNet 6: Ensemble application of symbolic and subsymbolic AI for sentiment analysis. Proceedings of the AAAI Conference on Artificial Intelligence, 34(05), 5569–5576. https://ojs.aaai.org/index.php/AAAI/article/view/6450

Chella, A., Frixione, M., & Gaglio, S. (2020). A cognitive architecture for emotion awareness in robots. Cognitive Systems Research, 59, 4–21. https://doi.org/10.1016/j.cogsys.2019.10.001

Creswell, J. D., Lindsay, E. K., & Moyers, T. B. (2023). Mindfulness interventions and the metacognitive basis for behavior change. Psychological Science in the Public Interest, 24(1), 1–39. https://doi.org/10.1177/15291006231158931

Crouse, D., Maffei, A., Yang, L., Park, N., & Tenenbaum, J. B. (2023). Neuro-symbolic concept learning from experience. Nature Machine Intelligence, 5, 504–515. https://doi.org/10.1038/s42256-023-00678-z

Filimowicz, M. (2024, December 9). Beyond the Turing Test: Unleashing the metacognitive core of AI. Medium. https://medium.com/@michaelfilimowicz/beyond-the-turing-test-unleashing-the-metacognitive-core-of-ai-14cdca697b87

Flavell, J. H. (1979). Metacognition and cognitive monitoring: A new area of cognitive–developmental inquiry. American Psychologist, 34(10), 906–911. https://doi.org/10.1037/0003-066X.34.10.906

Garcez, A. d., Lamb, L. C., & Gabbay, D. M. (2019). Neural-symbolic cognitive reasoning. Springer. https://link.springer.com/book/10.1007/978-3-030-00015-4

Ghosh, S., & Chollet, F. (2023). Can large language models reason about emotion? arXiv preprint arXiv:2305.09993. https://arxiv.org/abs/2305.09993

Griot, M., Hemptinne, C., Vanderdonckt, J., & Yuksel, D. (2025). Large language models lack essential metacognition for reliable medical reasoning. Nature Communications, 16, Article 642. https://doi.org/10.1038/s41467-024-45288-7

Lu, J. G., Sun, S., Li, A. Z., Foo, M. D., & Zhou, J. (2025). How and for whom using generative AI affects creativity: A field experiment. Journal of Applied Psychology. https://doi.org/10.1037/apl0001172

Marcus, G. (2020). The next decade in AI: Four steps towards robust artificial intelligence. arXiv preprint arXiv:2002.06177. https://arxiv.org/abs/2002.06177

Marcus, G., & Davis, E. (2020). Rebooting AI: Building artificial intelligence we can trust. Vintage. https://rebootingai.com/

Runco, M. A., & Acar, S. (2012). Divergent thinking as an indicator of creative potential. Creativity Research Journal, 24(1), 66–75. https://doi.org/10.1080/10400419.2012.652929

Schraw, G., & Dennison, R. S. (1994). Assessing metacognitive awareness. Contemporary Educational Psychology, 19(4), 460–475. https://doi.org/10.1006/ceps.1994.1033

Tankelevitch, L., Kewenig, V., Simkute, A., Scott, A. E., Sarkar, A., Sellen, A., & Rintel, S. (2024). The metacognitive demands and opportunities of generative AI. Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, Article 680, 1–24. https://doi.org/10.1145/3613904.3642902

Zadeh, A., Liang, P. P., Poria, S., Cambria, E., & Morency, L. P. (2018). Multimodal language analysis in the wild: CMU-MOSEI dataset and interpretable dynamic fusion graph. Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (ACL), 2236–2246. https://aclanthology.org/P18-1208/

Zhou, J., & George, J. M. (2001). When job dissatisfaction leads to creativity: Encouraging the expression of voice. Academy of Management Journal, 44(4), 682–696. https://doi.org/10.5465/3069410